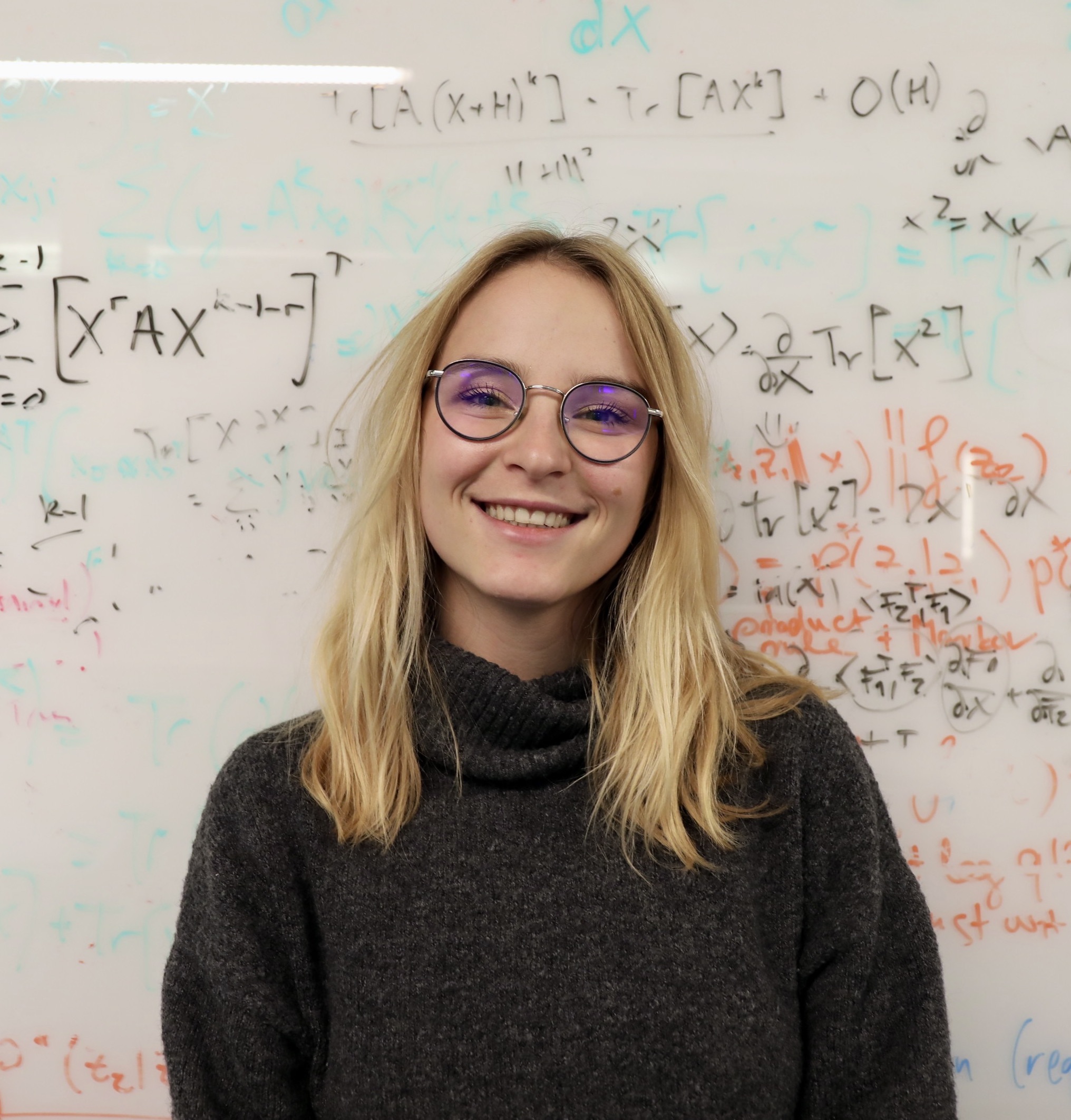

Clémentine Dominé

I am a Postdoctoral Researcher at ISTA, supported by the Cluster of Excellence (CoE) Fellowship, where I work with Prof. Marco Mondelli and Prof. Francesco Locatello.

Research

My research lies at the intersection of theoretical neuroscience and theoretical machine learning. Broadly, I aim to understand how the brain learns and builds representations to carry out complex behaviors—such as continual, curriculum, and reversal learning, as well as the acquisition of structured knowledge. I develop mathematical frameworks rooted in deep learning theory to describe adaptive and complex learning mechanisms, addressing questions that bridge machine learning and cognitive neuroscience.

Training

I completed my PhD at the Gatsby Computational Neuroscience Unit under the supervision of Andrew Saxe and Caswell Barry. Before that, I earned a degree in Theoretical Physics from the University of Manchester, including an exchange at the University of California, Los Angeles.

Community

Beyond my research, I am deeply involved in the academic community. I co-organize the UniReps: Unifying Representations in Neural Models workshop at NeurIPS, an event dedicated to fostering collaboration and dialogue between researchers working to unify our understanding of representations in biological and artificial neural networks. 🔵🔴

Mentoring and collaboration

Interested in working together on questions at the intersection of biological and artificial intelligence? I especially welcome students from underrepresented groups in cognitive science, neuroscience, and AI. Mentorship and collaboration are central to my work—feel free to reach out!

News

| Date | Announcement |
|---|---|
| Dec 1, 2025 | Heading to NeurIPS 2025 in San Diego for the UniReps workshop! Can’t wait to connect and discuss all things representation learning 🔵🔴 |
| Aug 1, 2025 | I’m excited to share that I’ll be joining Marco Mondelli and Francesco Locatello’s labs as a postdoc at ISTA Vienna starting next year. Looking forward to exploring new questions at the intersection of machine learning theory, representation learning, and beyond! |
| May 20, 2025 | Got two papers accepted at ICML 2025 🎉 🍊 |
| May 11, 2025 | 🚀 We’re excited to launch the ELLIS UniReps speaker series, a monthly event exploring how neural models, both biological and artificial, develop similar internal representations, and what this means for learning, alignment, and reuse. Each session features a keynote by a senior researcher and a flash talk by an early-career scientist, fostering cross-disciplinary dialogue at the intersection of AI, neuroscience, and cognitive science! 🔵🔴 |